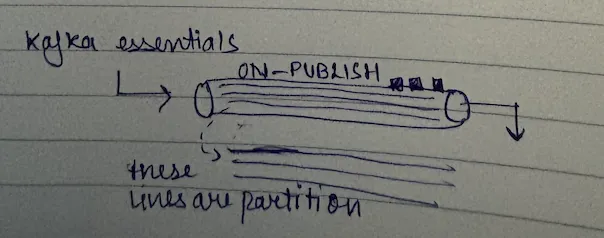

Kafka Essentials

Kafka is a message stream that stores the messages it receives, rather than discarding them once they are read. Internally, Kafka organizes messages into topics.

Every topic has 'n' partitions. A message is sent to a topic and, depending on the hash of its partition key, it is put into one of the partitions.

Messages are ordered within a partition, but there is no ordering guarantee across partitions.

When you increase the number of partitions, existing messages stay where they are - none of them are moved to the new partitions.

Key Kafka Concepts

Message Distribution: When you send a message to a topic, the message is sent to one of the partitions based on:

partition = hash(partition_key) % number_of_partitions
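The formula above can be sketched in a few lines of Python. This is only an illustration: `pick_partition` is a hypothetical helper, and `zlib.crc32` is a stand-in hash chosen because it is stable across runs. It is not the hash that Kafka clients actually use.

```python
import zlib


def pick_partition(partition_key: str, number_of_partitions: int) -> int:
    """Map a key to a partition index in [0, number_of_partitions)."""
    # crc32 is a stand-in for this sketch; Python's built-in hash() is
    # randomized per process for strings, so it would not be repeatable.
    key_hash = zlib.crc32(partition_key.encode("utf-8"))
    return key_hash % number_of_partitions


# The same key always lands on the same partition.
for key in ["user-1", "user-2", "user-1"]:
    print(key, "->", pick_partition(key, 3))
```

Because the result depends only on the key and the partition count, every message with the same key ends up in the same partition for as long as the partition count stays the same.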

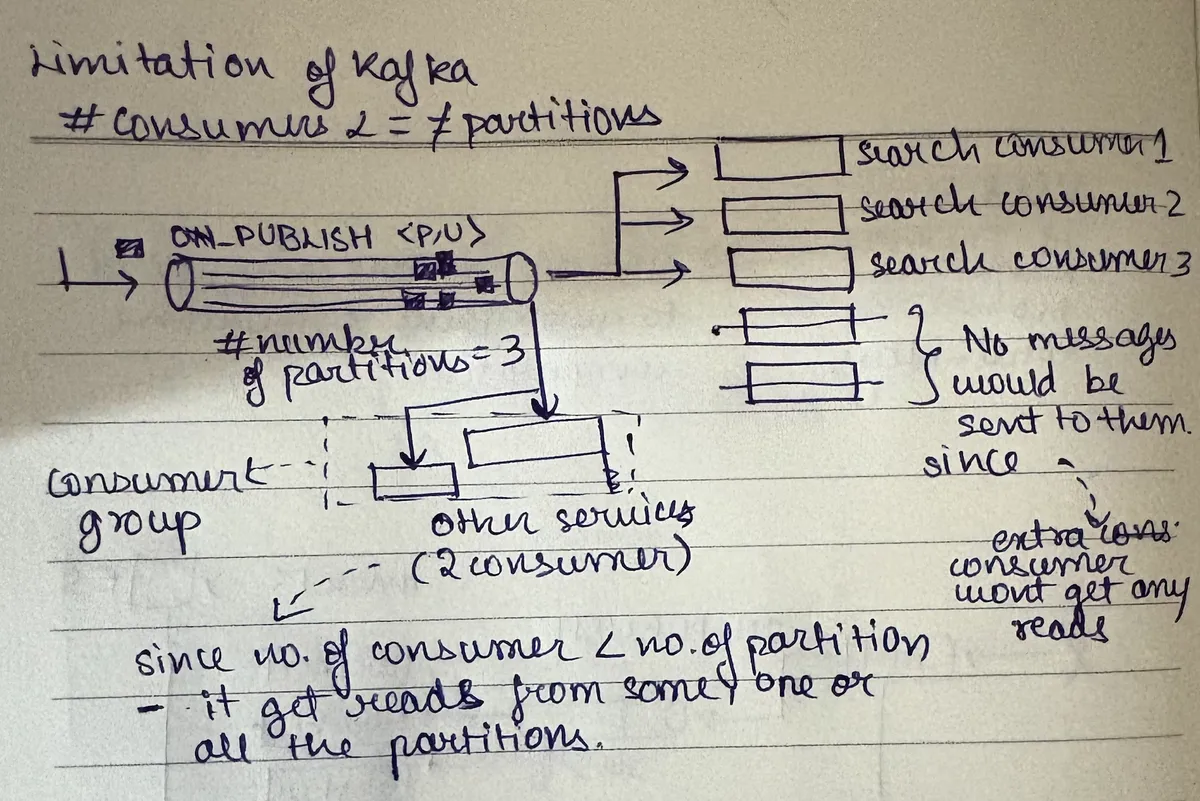

Limitation of Kafka: You cannot reduce the number of partitions of a topic, you can only increase it (for example, to make room for more consumers).

number_of_consumers <= number_of_partitions

For more consumers, you need more partitions:

- If you have 3 partitions, you can have at most 3 active consumers in a consumer group

- If you have 3 partitions but only 2 consumers → one consumer would own 2 partitions, the other would own 1 partition

- If you have more consumers than partitions → the extra consumers sit idle and would not get any messages
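The three cases above can be reproduced with a small assignment sketch. `assign_partitions` is a hypothetical function that spreads partitions round-robin; real Kafka clients offer several assignment strategies, so treat this only as a model of the "one owner per partition" rule.

```python
def assign_partitions(partitions: list[int], consumers: list[str]) -> dict[str, list[int]]:
    """Give every partition exactly one owner within a consumer group."""
    assignment: dict[str, list[int]] = {consumer: [] for consumer in consumers}
    if not consumers:
        return assignment
    for index, partition in enumerate(partitions):
        # Round-robin: partition i goes to consumer i mod (number of consumers).
        owner = consumers[index % len(consumers)]
        assignment[owner].append(partition)
    return assignment


# 3 partitions, 2 consumers: one consumer owns two partitions.
print(assign_partitions([0, 1, 2], ["c1", "c2"]))
# 3 partitions, 4 consumers: the fourth consumer gets nothing.
print(assign_partitions([0, 1, 2], ["c1", "c2", "c3", "c4"]))
```

A consumer with an empty list is still a member of the group, it just has nothing to read until a partition becomes available.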

When you increase partitions:

- You cannot reduce them again - so when increasing partitions, pick a number that is neither too high nor too low for your current scale

- Existing data is not rehashed - the new partitions are created empty. The hash function stays the same, but number_of_partitions changes, so new messages with the same key might go to a different partition, including the new ones

A consumer gets records from one, some, or all of a topic's partitions, depending on which ones are assigned to it.
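To see why a key can move after a partition increase, the sketch below compares the partition chosen for the same keys with 3 and then 4 partitions. The `pick_partition` helper and the `order-N` keys are made up for illustration, and `zlib.crc32` is again only a stand-in hash.

```python
import zlib


def pick_partition(partition_key: str, number_of_partitions: int) -> int:
    # Same hash, different modulus: only number_of_partitions changes.
    return zlib.crc32(partition_key.encode("utf-8")) % number_of_partitions


keys = [f"order-{i}" for i in range(20)]

# Keys whose target partition differs once the topic grows from 3 to 4.
moved = [k for k in keys if pick_partition(k, 3) != pick_partition(k, 4)]

for key in moved:
    print(key, ":", pick_partition(key, 3), "->", pick_partition(key, 4))
print(len(moved), "of", len(keys), "keys now map to a different partition")
```

Messages already written stay in their old partition, so for a moved key the older messages and the newer ones live in different partitions from that point on.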

Ordering Guarantees

- No global ordering across partitions

- Ordering guaranteed within a partition - all messages inserted in a partition are consumed in order of insertion

- Within a consumer group, each partition is assigned to exactly one consumer, but one consumer can handle multiple partitions
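A toy model makes the per-partition guarantee concrete. Each partition is modeled here as a plain append-only list, and the keys, events, and helper names are all hypothetical.

```python
import zlib
from collections import defaultdict

NUMBER_OF_PARTITIONS = 3


def pick_partition(partition_key: str) -> int:
    # Stand-in hash for this sketch, not the one Kafka clients use.
    return zlib.crc32(partition_key.encode("utf-8")) % NUMBER_OF_PARTITIONS


# partition index -> append-only list of (key, value) records
partitions: dict[int, list[tuple[str, str]]] = defaultdict(list)

events = [
    ("user-a", "login"),
    ("user-b", "login"),
    ("user-a", "add-to-cart"),
    ("user-a", "checkout"),
    ("user-b", "logout"),
]
for key, value in events:
    partitions[pick_partition(key)].append((key, value))


def events_for(key: str) -> list[str]:
    """Read the partition that owns `key` front to back, as its consumer would."""
    return [value for k, value in partitions[pick_partition(key)] if k == key]


print(events_for("user-a"))
print(events_for("user-b"))
```

Events for one key come back in the order they were sent because they share a partition. Nothing in this model says how the events of `user-a` and `user-b` interleave when they sit in different partitions.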

Commit & Data Deletion

Commit: How do you tell Kafka that you have processed a message? You don't delete messages here (as you would in SQS). Instead, you say "hey I've read till this point" or "I commit till this message", so the next time a consumer from that consumer group wakes up, it gets messages from that point onwards.

Auto commit is available as a configuration, but the goal is to commit after you're done processing (or before, depending on what you are building): committing after processing means a crash can cause a message to be processed again, while committing before processing means a crash can cause a message to be skipped.
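The commit-after-processing flow can be modeled without a broker. `PartitionLog` and `consume_batch` below are hypothetical names for a toy in-memory model of one partition and one consumer group. They are not part of any Kafka client library.

```python
class PartitionLog:
    """Toy model: one partition plus the committed offset of one consumer group."""

    def __init__(self, messages):
        self.messages = list(messages)
        self.committed = 0  # offset of the next message the group should read

    def poll(self, max_records=2):
        """Return (offset, message) pairs starting at the committed offset."""
        end = min(self.committed + max_records, len(self.messages))
        return [(offset, self.messages[offset]) for offset in range(self.committed, end)]

    def commit(self, next_offset):
        """Record "I've read till this point"; nothing is deleted."""
        self.committed = next_offset


def consume_batch(log, process, crash_on=None):
    """Process one polled batch, committing only after each message is handled."""
    for offset, message in log.poll():
        if message == crash_on:
            return  # simulated crash: this message is never committed
        process(message)
        log.commit(offset + 1)


log = PartitionLog(["m0", "m1", "m2", "m3"])
handled = []
consume_batch(log, handled.append, crash_on="m1")  # crashes while handling m1
consume_batch(log, handled.append)                 # a restarted consumer resumes at m1
print(handled, "committed offset:", log.committed)
```

The restarted consumer picks up at the uncommitted message, so nothing is lost. The price is that a message can be handled twice if the crash happens after processing but before the commit.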

Data deletion: Because consumers never delete messages, Kafka has a data deletion (retention) policy which says "I'll delete messages older than 7 days, 14 days, 30 days" - you configure your retention period.

You need to know:

- How many messages you're receiving (and how large they are)

- Your storage capacity

- How long it would take to fill storage

- Then decide retention policy

You expect your consumers to process messages within the retention period, so set the retention period with a buffer - if you expect processing within 14 days, set retention to 30-40 days.
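The sizing checklist above comes down to simple arithmetic. The sketch below uses made-up numbers and hypothetical function names, and it assumes a steady message rate while ignoring replication, compression, and index overhead, so treat the result as a rough estimate.

```python
SECONDS_PER_DAY = 86_400


def days_to_fill(storage_bytes: float, messages_per_second: float, avg_message_bytes: float) -> float:
    """Days until the given storage is full at a steady ingest rate."""
    bytes_per_day = messages_per_second * avg_message_bytes * SECONDS_PER_DAY
    return storage_bytes / bytes_per_day


def retention_fits(retention_days: float, storage_bytes: float,
                   messages_per_second: float, avg_message_bytes: float) -> bool:
    """True if the data kept for retention_days fits into the storage."""
    return retention_days <= days_to_fill(storage_bytes, messages_per_second, avg_message_bytes)


# Illustrative numbers: 1 TB of disk, 1,000 messages/s, 1,000 bytes per message.
storage = 10**12
print(round(days_to_fill(storage, 1_000, 1_000), 1), "days to fill")
print("7-day retention fits:", retention_fits(7, storage, 1_000, 1_000))
print("30-day retention fits:", retention_fits(30, storage, 1_000, 1_000))
```

If the retention period you want (processing time plus buffer) does not fit, the options are more storage, a shorter retention period, or a lower ingest volume.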