Communication - Polling, Websockets & SSE

Communication

Okay, so I assume you know what an HTTP request is. Of course you do.

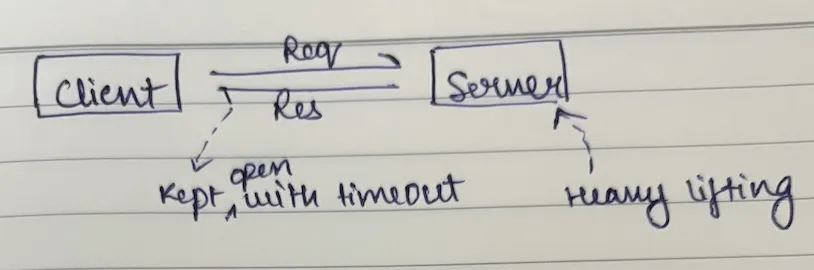

The usual communication follows the request-response paradigm: your client sends a request, the server understands what the client is expecting and sends the response. That's the lifecycle of an HTTP request.

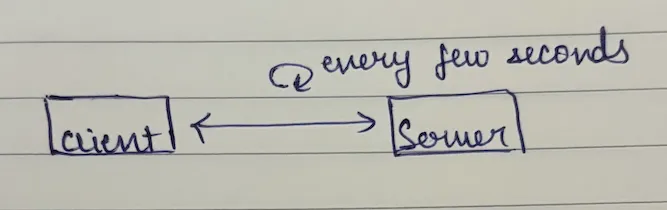

Short Polling

Let's discuss the first paradigm - short polling.

Short polling is when you create an EC2 instance and keep refreshing the page to see its current status. Either you refresh or your JavaScript does it, it doesn't matter. You're periodically polling the status, and each time the server gives you whatever the current status is.

What's the current status? Whatever the current status in the database, it sends you that. That's short polling.

It's like checking your food delivery status. You keep opening the app every few minutes - "Order confirmed", "Being prepared", "Being prepared", "Out for delivery", "Out for delivery", "Delivered!" You are constantly asking for the current status, and sometimes the answer hasn't changed since the last time you asked. That's short polling.

Examples:

- Cricket score refreshing - short polling

- Checking server is ready - short polling
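
A minimal sketch of what the client side of short polling looks like in code. The names here (`short_poll`, `fetch_status`, the "active" status) are made up for illustration; `fetch_status` stands in for whatever HTTP call your UI makes.

```python
import time
from typing import Callable, List


def short_poll(
    fetch_status: Callable[[], str],
    done_status: str = "active",
    interval_seconds: float = 2.0,
    max_attempts: int = 30,
    sleep: Callable[[float], None] = time.sleep,
) -> List[str]:
    """Ask for the current status every interval until it equals done_status.

    Returns every status seen, in order, so the caller can count how many
    calls were needed.
    """
    seen: List[str] = []
    for _ in range(max_attempts):
        status = fetch_status()  # one full request/response per iteration
        seen.append(status)
        if status == done_status:
            break  # polling stops once the resource is ready
        sleep(interval_seconds)
    return seen
```

Every iteration is a complete request and response, whether or not anything changed since the last one. That is the cost of short polling, and also why it is so easy to implement.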

Disadvantages of Short Polling

Here we can start seeing the disadvantages. The main one is too many HTTP calls! Of course that is a problem. But not always! Most use cases are okay with polling for data; you don't need to optimize for everything. Optimize for simplicity! Be very pragmatic and practical with your implementation!

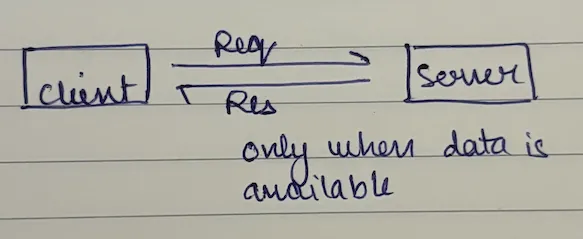

Long Polling

Next up: long polling! Opposite of short polling: long polling!

In short polling we sent a request and got the current status in the response, but we had to poll very frequently. Long polling says: you make a request, and I'll send you the response only when there is a change in the data. That's long polling.

For example, imagine you're waiting for a pizza delivery. With short polling, you keep calling the restaurant every 2 minutes: "Is my pizza ready? Is my pizza ready? Is my pizza ready?" They keep telling you "still cooking, still cooking, still cooking, ready for pickup!"

With long polling, you call once and say "call me back when my pizza is ready." You make one call, and the restaurant only calls you back when there's actually something to tell you - "your pizza is ready!" You wait with the phone line open until they have news. That's long polling.

Short Polling vs Long Polling

Short polling vs long polling:

- Short polling - sends response right away

- Long polling - sends response only when done, connection kept open for the entire duration

Example: EC2 provisioning

- Short polling - gets status every few seconds

- Long polling - gets response when server ready

How Long Polling Works

Think about the implementation - this is precisely the code that you would write. Short polling and long polling are not some magic thing that happens, and they are not protocols. It's what you code, how you define your user experience to be.

Imagine you are starting an EC2 server - you register the request, and async workers pick it up and start processing. Your UI keeps firing requests to see the current status. What's the current status? What's the current status? Then it becomes active, and that's when the polling stops. That's short polling.

In case of long polling you make one request, and one of the ways to implement it is that the API server itself keeps checking the database while holding the request. The current status was starting. Is it active? Is it active? Is it active? Once it turns active, the server responds. So your long polling might internally be implemented as short polling. That's one of the ways to implement it.
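
Here is a rough sketch of that first approach: the server holds the request and polls its own data store until something changes or a timeout passes. `long_poll`, `read_status`, and the default timings are hypothetical; `read_status` stands in for the database query.

```python
import time
from typing import Callable, Optional


def long_poll(
    read_status: Callable[[], str],
    last_seen: str,
    timeout_seconds: float = 10.0,
    check_interval: float = 0.5,
) -> Optional[str]:
    """Hold the request until the status differs from last_seen.

    Returns the new status, or None if nothing changed before the timeout
    (the client would then simply issue the next long-poll request).
    """
    deadline = time.monotonic() + timeout_seconds
    while True:
        status = read_status()  # internally this is still a short poll of the store
        if status != last_seen:
            return status
        if time.monotonic() >= deadline:
            return None
        time.sleep(check_interval)
```

From the client's side this is a single call that returns only when there is news. The repeated checking has moved from the network to the server.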

Or your long polling might sit on something reactive, like Redis pub/sub or some other database that can tell it the status has changed - however you want to implement it. What matters is how many API calls go out from the end user's perspective. That's what differentiates a short poll from a long poll.
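
The reactive variant can be sketched without any real database by using an in-memory object that wakes up waiting requests when the status changes. `StatusBoard` is a made-up stand-in for a store with change notifications, not any particular product's API.

```python
import threading
from typing import Optional


class StatusBoard:
    """In-memory stand-in for a store that can notify waiters on change."""

    def __init__(self, status: str) -> None:
        self._status = status
        self._changed = threading.Condition()

    def set_status(self, status: str) -> None:
        with self._changed:
            self._status = status
            self._changed.notify_all()  # wake every held long-poll request

    def wait_for_change(self, last_seen: str, timeout_seconds: float) -> Optional[str]:
        """Block until the status differs from last_seen, or return None on timeout."""
        with self._changed:
            changed = self._changed.wait_for(
                lambda: self._status != last_seen, timeout=timeout_seconds
            )
            return self._status if changed else None
```

Here the held request does no repeated queries at all. It sleeps until `set_status` is called, which is the behaviour you would be after when wiring long polling to a notification mechanism.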

The idea is: from your client's perspective, are you making periodic calls, or are you making one call that responds only when the data has changed?

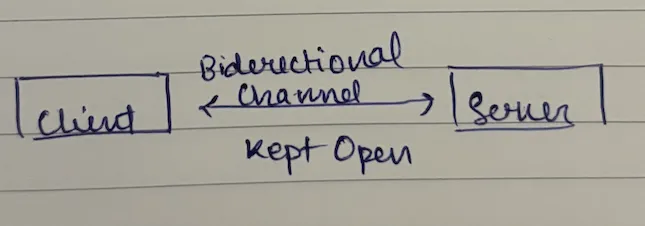

WebSockets

WebSockets are essentially bidirectional channels - that's the best way to put it. The usual HTTP paradigm is request-response: your client sends a request saying what it needs done, your server understands "okay, this is what the client is expecting" and sends the response. That's the lifecycle of an HTTP request.

But what WebSockets say is this: What if my server can proactively send data to client without client asking for it? Again, opposites. So either your client always asks for data and then only server sends it or server proactively sends the data to the client. That is WebSocket.

Everything here is TCP based. TCP itself does not define an idle timeout: unless keepalive is enabled, a connection with no traffic just stays open. HTTP as a protocol does not mandate one either. It's the web server that implements a timeout, or a load balancer (or some other box in the middle) that closes the connection after a certain idle period.
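
To make the "timeouts are a server decision" point concrete, here is a small sketch where the idle timeout lives entirely in application code. `read_with_idle_timeout` is a hypothetical helper; a real web server or load balancer applies the same kind of policy with its own configuration.

```python
import socket
from typing import Optional


def read_with_idle_timeout(conn: socket.socket, idle_seconds: float) -> Optional[bytes]:
    """Return the next chunk of data, or None if the peer stayed silent too long.

    The timeout is enforced by this code, not by TCP: the connection itself
    is still open after a timeout unless the caller decides to close it.
    """
    conn.settimeout(idle_seconds)
    try:
        return conn.recv(4096)
    except socket.timeout:
        return None
```

After a `None` result the caller can close the connection (which is what an idle timeout on a server amounts to) or keep waiting. TCP is happy either way.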

WebSocket is a protocol implemented on top of TCP. Once the connection is set up it's kept alive, and while it's open your server can proactively send data to the client. Rather than the client saying "hey, give me the latest message", the server can push "hey, you received this message". That's the whole idea.
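
The push idea can be shown with nothing but a persistent TCP connection. This sketch is not the WebSocket protocol (there is no handshake or framing, just newline-delimited text), and `push_updates` and `demo` are invented names. It only illustrates a server sending data that nobody asked for.

```python
import asyncio
from typing import List


async def push_updates(writer: asyncio.StreamWriter, messages: List[str]) -> None:
    """Server side: send messages without waiting for any request."""
    for message in messages:
        writer.write((message + "\n").encode())
        await writer.drain()
    writer.close()
    await writer.wait_closed()


async def demo(messages: List[str]) -> List[str]:
    """Start a server on a free local port, connect once, collect what it pushes."""

    async def on_connect(reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
        await push_updates(writer, messages)

    server = await asyncio.start_server(on_connect, "127.0.0.1", 0)
    port = server.sockets[0].getsockname()[1]
    received: List[str] = []
    async with server:
        reader, writer = await asyncio.open_connection("127.0.0.1", port)
        # The client never sends anything; it only reads what arrives.
        while line := await reader.readline():
            received.append(line.decode().rstrip("\n"))
        writer.close()
        await writer.wait_closed()
    return received
```

Running `asyncio.run(demo(["hey, you received this message"]))` returns the pushed lines. A real WebSocket connection adds a handshake and message framing on top, and lets the client send on the same connection too.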

WebSocket Applications

Classic examples of WebSocket:

- Chat applications - that's the hello world of WebSockets, that's what you find on the internet filled with tutorials

- Stock market ticker apps like Robinhood or Zerodha - they use WebSockets to send you the market ticks (by the way, fun fact: they typically receive market ticks from the stock exchange over UDP, not TCP, but then send them to you over WebSocket, which runs on TCP, because that's more reliable)

- Live experiences - for example let's say two people are reading the same blog, one person claps, other person sees the claps. You went live on Instagram, one person hearts and you see the hearts floating on your screen

- Multiplayer games - again all can be built with WebSockets

Advantages:

- Real-time data transfer

- Low communication overhead

Server-Sent Events (SSE)

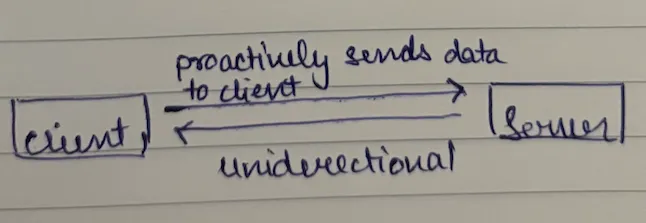

What's the opposite of bidirectional? Persistent bidirectional communication vs persistent unidirectional communication - that's where you get server-sent events.

It is literally a persistent connection, but here your client establishes the connection and then only the server sends. Your client will not send any further data to the server over it; it set up the connection just to receive server sent events.

For example again stock market ticker - Zerodha uses WebSockets, they don't use SSE but you can build a stock market ticker with server sent events.

SSE Applications

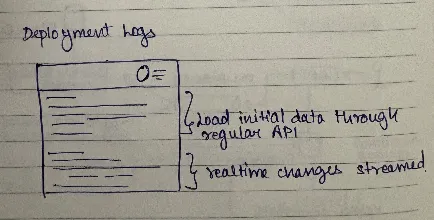

A good example of that is deployment log streaming. Imagine you trigger a deployment on a web interface and the deployment is happening on a server and that server is doing the deployment but the deployment log needs to be streamed to your user. So essentially when a deployment is happening, log is getting written on a log file on the server but you need to see it on your web UI.

This can be implemented with server sent events - the client connects, and the server tails the file and keeps sending each new line to the client. So deployment logs streaming from your deployment machine to your frontend can be done with server sent events.
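
A small sketch of the server side of that log stream. The wire format (`data:` lines ended by a blank line) is the standard text/event-stream format; `format_sse` and `stream_log` are hypothetical helper names, and the HTTP plumbing around them is left out.

```python
from typing import Iterable, Iterator


def format_sse(data: str) -> str:
    """Encode one message in the text/event-stream wire format.

    Each line of the payload gets its own "data:" field, and a blank line
    ends the event.
    """
    lines = data.split("\n")
    return "".join(f"data: {line}\n" for line in lines) + "\n"


def stream_log(lines: Iterable[str]) -> Iterator[str]:
    """Turn log lines (for example from tailing a file) into SSE events."""
    for line in lines:
        yield format_sse(line.rstrip("\n"))
```

The server would write each yielded string to a response with `Content-Type: text/event-stream` and keep that response open while the deployment runs.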

Again, it can also be done with WebSockets. But if everything can be done with WebSockets (and I get bidirectional for free), why do I need server sent events?

Why SSE over WebSockets?

Because server sent events are much lighter to run. The bytes per message are not really the difference - a small WebSocket frame carries only a few bytes of header. WebSocket is heavy in everything around the message: it is a separate protocol with its own upgrade handshake, framing, and ping/pong, and both sides have to handle traffic in both directions. Your proxies, load balancers, and servers all need to speak it.

In case of server sent events it is plain HTTP: a long-lived response on which the server writes text events with the bare minimum of structure, and the browser's EventSource reconnects automatically if the connection drops. How much more efficient that is in practice depends on your stack, so measure before quoting a number like 4x. What it gives up is that your client cannot send anything to the server over this connection.
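
To put rough numbers on the per-message cost, here is a sketch that builds the bytes for one "hello" in each format. The function names are made up, and the WebSocket helper covers only the simplest case: a short, unmasked, server-to-client text frame.

```python
def websocket_text_frame(text: str) -> bytes:
    """Build an unmasked (server-to-client) WebSocket text frame for a short payload."""
    payload = text.encode("utf-8")
    if len(payload) > 125:
        raise ValueError("this sketch only handles payloads up to 125 bytes")
    # 0x81 = FIN bit set + opcode 0x1 (text); second byte = payload length, mask bit clear
    return bytes([0x81, len(payload)]) + payload


def sse_event(text: str) -> bytes:
    """Build a single-line Server-Sent Event."""
    return f"data: {text}\n\n".encode("utf-8")
```

For "hello" the WebSocket frame is 7 bytes and the SSE event is 13 bytes (client-to-server WebSocket frames add a 4-byte masking key). The framing cost is a handful of bytes either way, so the practical case for SSE rests more on simplicity - plain HTTP, no upgrade handshake, one direction to reason about - than on byte counts.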

Other places where you see server sent events:

- Updates on Twitter feeds - where you are on Twitter and you are scrolling and then you see "5 new posts", "36 new posts" - that kind of one-way update is a good fit for server sent events

- The entire world of LLMs that has now sprung up - their streaming APIs are mostly server sent events, not WebSockets, because your client doesn't have to communicate any further once you invoke a request

For example, you invoke a request saying chat.complete - that classic API. This HTTP connection is what you have, you just set stream=true and as and when the tokens are generated, they keep streaming tokens to you and here there is no further communication from client. You are just a viewer, you are not doing anything, you are just watching it doing its thing. So this is server sent events.
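
On the client side, consuming such a stream mostly means reading `data:` lines as they arrive. The sketch below assumes single-line events and a `[DONE]` end marker, which is a convention of some LLM APIs and not part of the SSE format itself. `iter_sse_data` is a hypothetical helper, and the sample tokens are invented.

```python
from typing import Iterable, Iterator


def iter_sse_data(lines: Iterable[str], done_marker: str = "[DONE]") -> Iterator[str]:
    """Yield the payload of each single-line "data:" event until done_marker."""
    for raw in lines:
        line = raw.rstrip("\r\n")
        if not line.startswith("data:"):
            continue  # skip blank separators, comments, and other fields
        payload = line[len("data:"):]
        if payload.startswith(" "):
            payload = payload[1:]  # the format allows one optional space after the colon
        if payload == done_marker:
            return
        yield payload
```

Feeding it the lines of a streaming response and joining the yielded pieces gives the full text. A complete SSE parser would also accumulate multi-line `data:` fields and handle `event:` and `id:`, which this sketch skips.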

You can also do it with WebSocket, but most LLM APIs have chosen server sent events because it's simpler and does the job.

How Real-time Apps are Generally Structured

First, a myth that everybody has - a lot of people think that when I have a persistent connection I will get everything on the persistent connection. For example, think of messages. You think of Slack. You think every message I receive will be on WebSocket. Hey brother, who said that? No, it's not like that.

**_Only real-time updates will come over the persistent connection._**

Think about it. When you load Slack, when you load your messaging app, what happens?

- Load the initial interface

- Load initial data - that can happen over REST, no problem there

- Subscribe for real-time updates - only the real-time updates that should come to you come over the persistent connection

In case of your deployment log, you load the initial interface, then you get your initial data over REST API, and then you subscribe for real-time updates that come over here. That's how most systems are built.
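
The load-then-subscribe structure can be sketched as a small client-side view. `MessageView` and the message shape (`id`, `text`) are invented for illustration; `fetch_history` stands in for the REST call, and `on_realtime_update` is what the WebSocket or SSE handler would call.

```python
from typing import Callable, Dict, List


class MessageView:
    """Holds messages for one channel: initial page over REST, then live updates."""

    def __init__(self) -> None:
        self._by_id: Dict[int, str] = {}

    def load_initial(self, fetch_history: Callable[[], List[dict]]) -> None:
        """One ordinary request/response call for the existing data."""
        for message in fetch_history():
            self._by_id[message["id"]] = message["text"]

    def on_realtime_update(self, message: dict) -> None:
        """Called for each event pushed over the persistent connection."""
        # Keyed by id, so an update that overlaps the initial load does not duplicate.
        self._by_id[message["id"]] = message["text"]

    def messages(self) -> List[str]:
        return [self._by_id[i] for i in sorted(self._by_id)]
```

Keying by message id is one simple way to handle the overlap between the initial load and the first few live events; other designs use a cursor or timestamp instead.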

You don't have to send everything over WebSockets. Super general statement! Most people think all messages are sent the same way. Like that over-generalized statement: "Writes go to Master, Reads go to Replica" - over-generalized statement without any context. Same thing goes over here. Not all messages are sent over WebSockets.

You can do your initial load over REST API and then just subscribe to real-time changes over WebSocket. This is how most systems work:

- Stock market ticker - your interface is loaded, your stocks are loaded (initial value), and then the real-time updates come over WebSocket

- Chat applications - initial messages loaded via REST, new messages come via WebSocket

It doesn't mean that every single thing would come over WebSockets.

How to Choose Between Communication Patterns

So how do we choose one over the other - short polling, long polling, WebSockets, SSE? It's all about experience! They all differ in product experience - how users see your product.

Product Experience Matters

Imagine a chat application, you and me chatting. If it does short polling (let's say every 5 seconds), then nothing shows up for 5 seconds, then I get 100 messages at once, then nothing again, and then 2 messages. Poor user experience!

So that's why you should ideally do it over WebSockets because as and when you get messages, the messages are sent to you. So think of product experience, user experience - this is the buffet served to you, decide what you want to use when.
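
The burstiness of polling in a chat is easy to see with a little arithmetic. This sketch assumes the client polls at exact multiples of the interval and that responses are instant; `delivery_times` is a made-up helper.

```python
import math
from typing import List


def delivery_times(sent_at: List[float], poll_interval: float) -> List[float]:
    """Time at which each message becomes visible if the client polls at
    t = poll_interval, 2 * poll_interval, ... (instant responses assumed)."""
    return [max(1, math.ceil(t / poll_interval)) * poll_interval for t in sent_at]
```

With a 5 second interval, messages sent at 0.5, 1.0, and 4.9 seconds all show up together at the 5 second mark, and one sent at 5.1 seconds waits until 10. With a push-based connection each would appear roughly when it was sent.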

Trade-offs to Consider

Q: In the long polling example, the connection timeout was 10 seconds. But how does the server check the status - are you querying the database continuously? Won't it put load on the database?

A: That's the trade-off of users making multiple calls vs you making multiple DB calls. If you are not doing it, then users would keep refreshing the page. The number of queries on the database would remain the same - either you do it or users do it, or the JavaScript code does it. You decide the complications you would want to go for.

Again, the core thing is how you want to craft the user experience.

Resource Considerations

Q: In case of WebSocket, more resources on the server are utilized, right?

A: Of course, but it depends. Everything is hardware. Every persistent connection holds resources on a server, so you would need more servers. Are you okay with spending that much on persistent connections? All of these factors need to be considered.

Q: In short polling, the network bandwidth would be more utilized than server resources because you are making frequent calls?

A: Yes, but then if it's easier to implement and your users are okay, go for it. There is no one right way to do it.

Decision Framework

So basically use case to use case? Yes!

Key factors to consider:

- User experience requirements - how real-time does it need to be?

- Implementation complexity - what can your team handle?

- Resource costs - server resources vs network bandwidth

- Scale - how many concurrent users?

- Infrastructure - what's easier to deploy and maintain?

Remember: There is no one right way to do it. Optimize for simplicity first, then optimize for user experience when you need to.